Bailey: The Personal (Music) Trainer

Research Project Developed for Spotify

January 2021 - May 2021

I designed the conversational flows and embodiment for Bailey, an anthropomorphized “personal music trainer”. Bailey learns autonomous vehicle riders’ relationships to music, then curates rewarding and intimate listening experiences.

Research Goal

How might we design a conversational agent that consistently curates highly satisfying music-listening experiences for autonomous vehicle riders?

Drivers place high importance on music during car rides but struggle to curate listening experiences because of the overwhelming number of music choices. A conversational agent could reduce this stress, especially in autonomous vehicles (AVs), where riders are free to focus on the conversation.

Solution Benefits

It reduces listener effort to zero in curating high-quality music recommendations

It deepens listeners' personal connection to music by facilitating music-related self-reflection

It teaches users about the elements they like in music to empower them in future discovery

My Role

UX Researcher + Conversation Designer

I designed and led novel research methods exploring users’ relationships to music and their reactions to our Wizard of Oz (WoZ) prototypes.

I mapped out conversational flows and designed an anthropomorphized agent that encourages rider vulnerability and information-sharing.

Client

Spotify (Academic Project)

Collaborators

Project Timeline

After a diary study to identify the highs and lows of music-listening today, we tested 12 ideas. We then aligned on a single in-car experience, which we tested in an immersive AV setup to create our personal music trainer.

Explore Current Relationships to Music

Diary Studies

What separates a good listening experience from a bad one?

We knew from our initial research that users’ music-listening experiences in cars weren’t meeting their expectations. But what did that mean? What did users expect from a truly satisfying listening experience?

To understand that, we conducted a diary study. We asked 6 participants to document every distinct music-listening experience they had over the course of three days, across various settings, contexts, and devices.

QUESTION:

How can we understand the specific elements that are critical to highly satisfying listening experiences?

IDEA:

Capture users’ music-listening sessions with a diary study and interview them on the differences between the good sessions and the bad ones

Semi-Structured Interviews

We learned...

From follow-up interviews diving into their diary entries, we uncovered four key elements that together created transcendent music-listening experiences:

Users want music they can connect to on a deeper emotional or intellectual level

Listeners want end-to-end control because they don’t trust existing music platforms to find their preferred music for them

Users want to quickly and easily find music they know they’ll like, but usually can’t

Listeners define good music as music that helps them reach an aspirational or desired mood

Explore Possible Solutions

Storyboarding + Speed Dating

How could an agent fill the gaps in today’s listening experiences?

There were still three big hurdles to narrowing in on a single solution:

1

We needed to separate users’ perceived needs from their actual needs by seeing how receptive they were to potential solutions

2

We wanted to understand when users would be comfortable relying on a conversational agent (CA) to meet their needs

3

We didn’t know how proactive the agent should be, or how much data collection was permissible

I suggested storyboarding and speed dating as a path forward. We could test three drastically different ideas per identified need, varying the agent’s level of proactivity and the amount of data it collected. This approach helped us narrow in on a single need and agent role.

ISSUE:

We didn’t know which needs to focus on, or how comfortable users were with a CA

IDEA:

Use storyboarding and speed dating to identify the biggest pain points, and users’ reactions to a CA

We learned...

From 12 storyboards, we identified several clear user preferences:

Listeners most wanted an agent that could curate music to the specific moods and preferences of the moment, going beyond simple recommendations

Listeners reacted adversely to complex sensor technology that tried to infer their emotions or preferences

Listeners didn’t want to spell out exactly what they wanted to an agent because it felt like a hassle and an inconvenience

Remote Test an AV Experience

How can we curate the music while respecting privacy and minimizing user load?

Users wanted music that was emotionally resonant, but without having to dictate exactly what they wanted or have things sensed automatically.

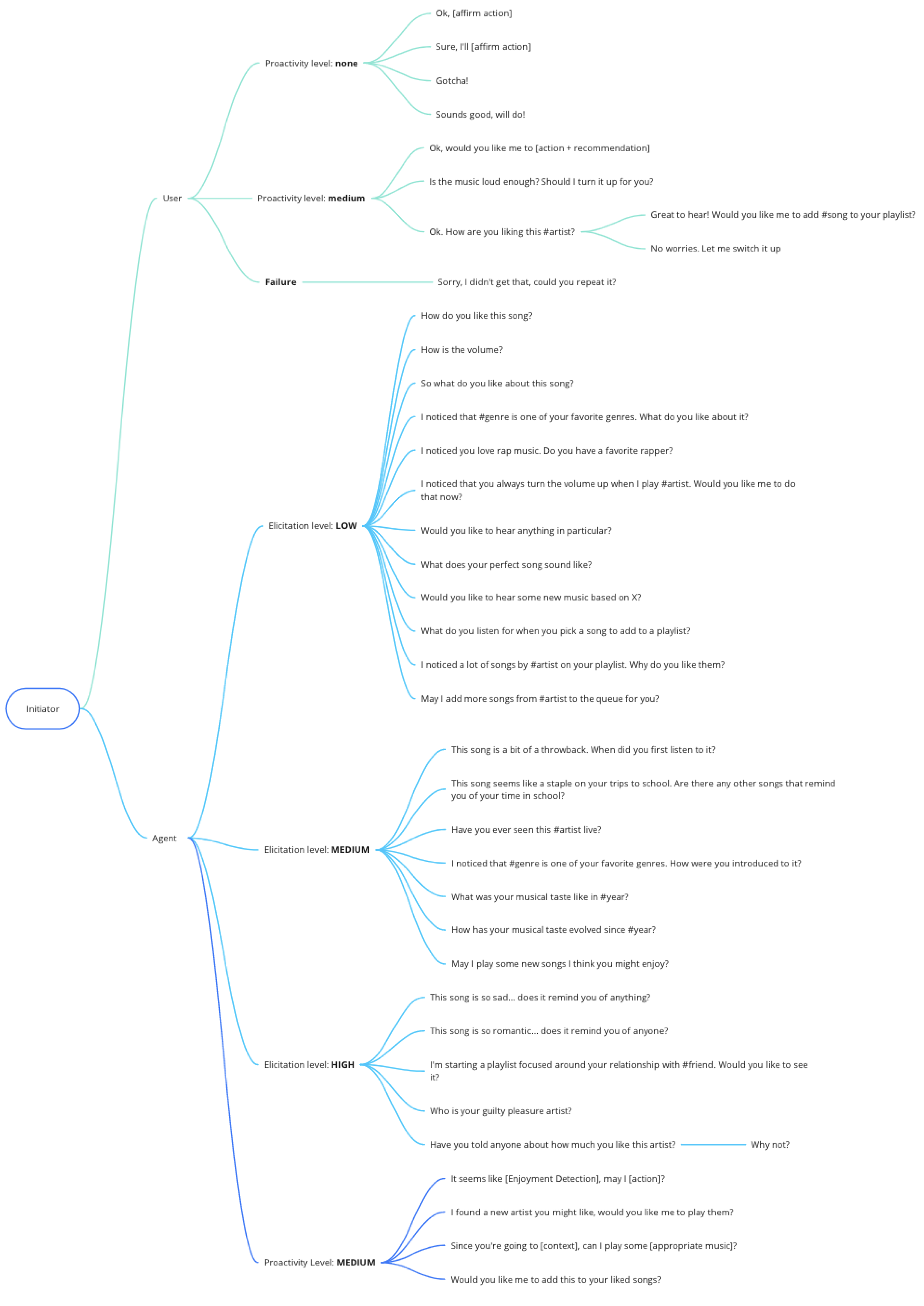

I reframed these constraints. What if our conversational agent learned about users’ musical tastes over time through simple conversation? The agent could then curate more personal, relevant music without users feeling exploited or creeped out.

CONSTRAINT:

Users wanted music that was more emotionally resonant, but didn’t want an agent that made them do all the work, or sensed things automatically

IDEA:

What if our agent mimicked humans and learned more about musical tastes through natural, back-and-forth conversations?

Literature Review

How do we test the scalability of our findings?

For this idea to work, our agent needed to make users feel comfortable sharing personal information about themselves and their connections to music.

We dove deep into the literature on elicitation and vulnerability with non-human agents, and formed a few principles to guide the agent’s conversation:

RESEARCH SAID: People build trust gradually as they become more vulnerable, and respond adversely to personal questions asked prematurely
SO WE: Begin interactions with “low-elicitation” questions, and slowly become more personal as users open up

RESEARCH SAID: Users prefer and trust digital assistants that mirror their level of “chattiness”
SO WE: Adapt the number of questions asked based on how talkative the user is

RESEARCH SAID: Digital assistants that express vulnerability of their own (e.g., “Sorry for the mistake, I was rewired this morning”) increase user trust
SO WE: Insert moments of agent vulnerability when making a mistake or when facing knowledge gaps

Wizard of Oz (WoZ) Prototyping + Remote Usability Testing

How could we test a CA under time and resource constraints?

We faced a few constraints in prototyping our imagined agent, which we had to solve creatively:

ISSUE:

FIX:

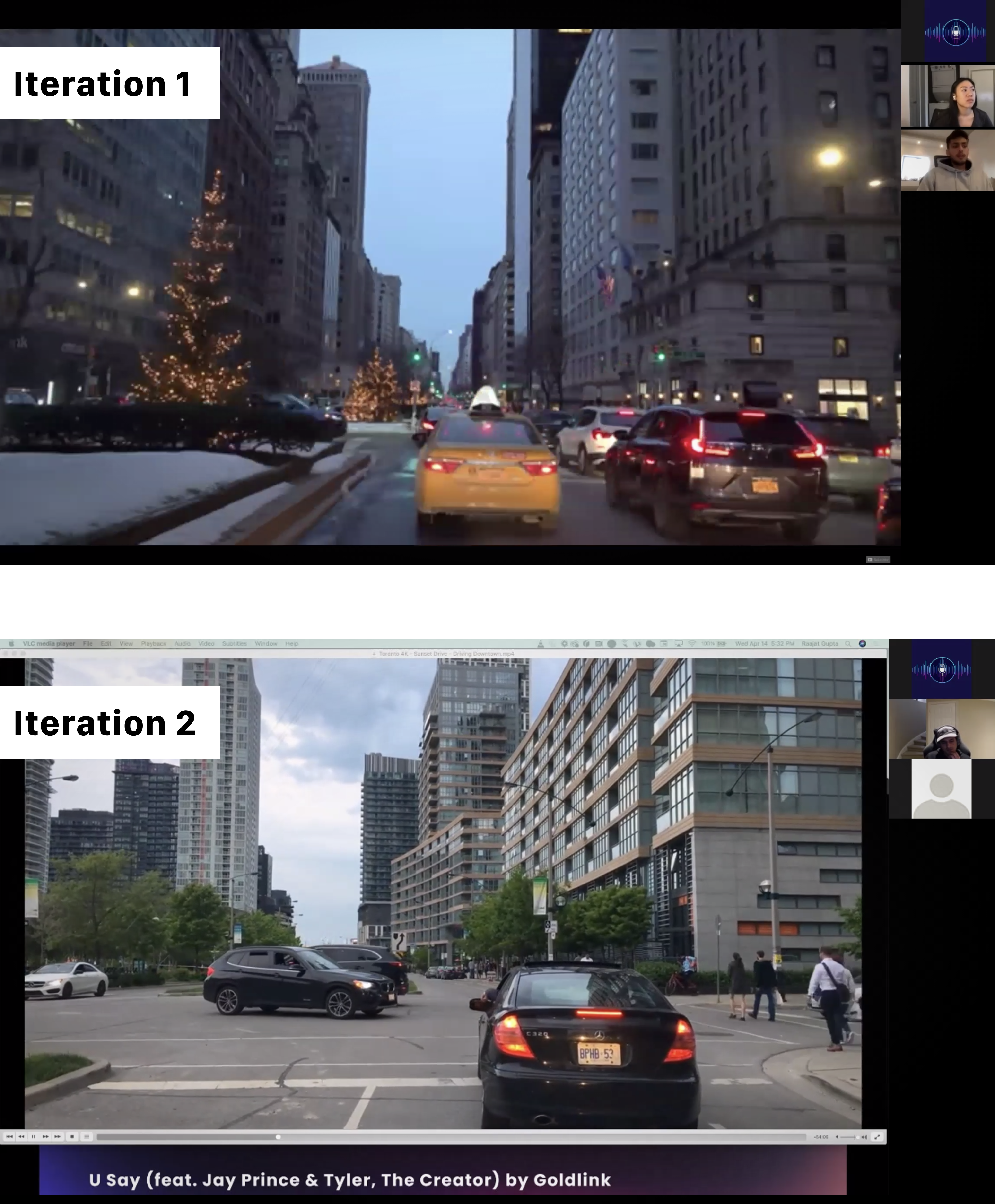

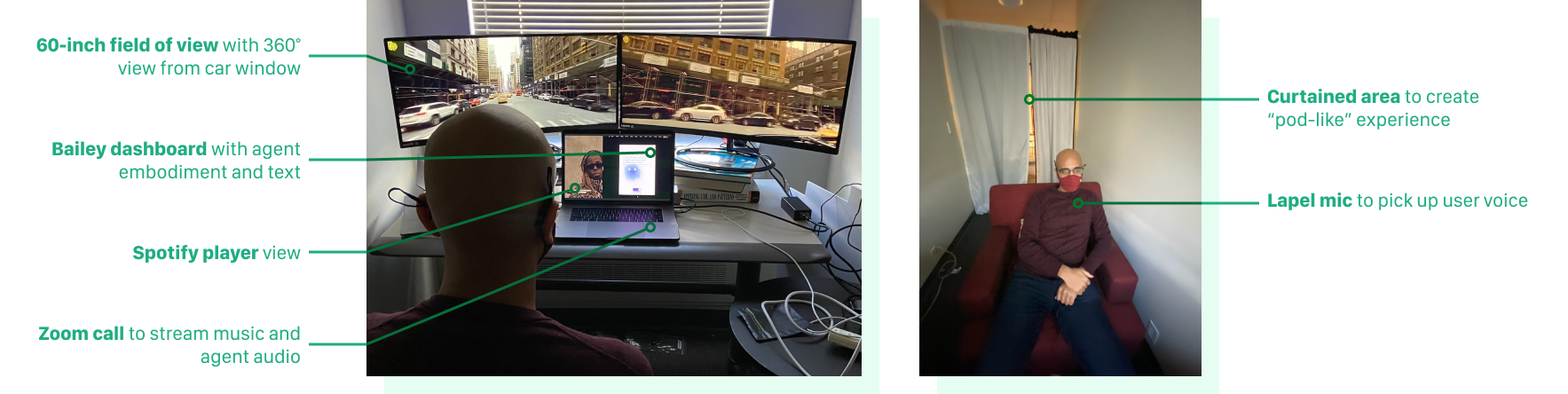

To remain safe with COVID-19, we couldn’t build any in-person or in-car prototypes

Simulate an in-car experience by playing POV driving footage for participants, streamed over Zoom

We didn’t have the time to build a functioning CA as complex as we needed it to be

Leverage a WoZ prototype with text-to-speech conversion of whatever we input

Our agent’s value would only become clear over time, as it learned more about user tastes

Schedule 3 30-minute sessions, so the agent can learn user preferences over time

Posing as the agent, we played music for participants and asked questions to learn more about their tastes and personal connections.

By learning more after each session, we were able to delight users with highly personal, emotionally resonant music by the third session.

We learned...

Nine participants completed our study of three 30-minute sessions, and we emerged with four critical learnings:

Listeners felt the conversational agent enriched their music experience and led to better music with less effort

Listeners didn’t feel totally comfortable sharing their more personal perspectives because the agent felt too robotic and alien

Listeners were unable to take the AV experience completely seriously because the prototype felt too much like sitting at their computers

Listeners loved when the agent shared its own perspective, such as how it understood their connections to the music or which elements of the music they loved most

Usability Test an Immersive AV Experience

Evidence-Based Design

How do we instill listener trust and openness?

Users still felt uncomfortable getting too personal with a “robot”. We turned to our study’s findings and additional research for solutions:

FINDING: Users were more comfortable when the agent provided its own perspective and felt more “human”
IDEA: What if we restructured agent recommendations as opinions, and gave the agent a friendlier, more humorous personality?

FINDING: Agent embodiment increases initial trust, especially before an agent’s competence is understood
IDEA: Pair the agent’s friendly voice with a cute face and a name (Bailey) that make it feel more lifelike

FINDING: Users were far more comfortable with the agent once they understood how it used their information to recommend better music
IDEA: Introduce Bailey through an onboarding flow that also explains how conversation fuels better music

Wizard of Oz (WoZ) Prototyping + Usability Testing

How do we create an immersive experience?

As COVID-19 restrictions were lifted, our team had more options for conducting an immersive study. Users were still uncomfortable in extremely close quarters like a car, so we improvised by building our own autonomous vehicle setup.

Users’ experiences were far smoother. Participants were more open and vulnerable, which in turn made their experience more realistic and enjoyable.

Final Value Proposition

Bailey curates deeply personal music for listeners in autonomous vehicles by learning more about users’ relationships to music and the specific elements they like in songs. Bailey also facilitates self-reflection on musical tastes and educates users on musical elements.

Curate More Personal Musical Moments

Explores why people like music (e.g., the voice, beat, lyrics, instruments) to get a more accurate picture of users’ preferences.

Learns users’ relationships to the music including associations to their past, other people, or personal connections to create more powerful playlists or “time capsule” moments.

Reduce Listener Effort to Zero

Curates the end-to-end listening experience by using conversational cues to play music that matches what users actually want in the moment.

Builds listener trust over time with a friendly personality and by recommending unfamiliar music only after users’ tastes are adequately understood.

Improve Listening Experiences Beyond the Vehicle

Carries listener preferences beyond the ride through customized recommendations on their Spotify pages that tie to their relationships, personal connections, and song element preferences.

Teaches listeners about their own tastes by identifying user-liked music elements and microgenres, empowering listeners in their future music discovery.

Results & Final Thoughts

Bailey's role as a personal music trainer opens up new opportunity spaces for how a conversational agent can enrich listeners' lives, which I'll be exploring further.

The personal (music) trainer

Although users wanted Bailey to help curate better recommendations, one of our most surprising findings was Bailey’s role as a teacher:

THE PERSONAL MUSIC TRAINER:

Learn users’ musical tastes and connections to music. Then teach them about musical elements and facilitate a deeper reflection on their personal connections to music.

I’ll be continuing this research as part of a broader team that will dive into the interaction design of simultaneously learning about listeners’ tastes and teaching them about those tastes.

Near-zero effort needed to get high-quality music recommendations

Deepen their own personal connection to music through self-reflection

Identify liked musical elements to empower them in future discovery

Increased user sign-up & retention from a superior recommendation system

Increased active users as car rides become immersive music experiences

Rich data source on user connections, tastes, and personal preferences